The goal is to engineer a system that can guide users through unfamiliar tasks. To achieve this, I have developed models that, given a procedure such as a recipe or medical protocol, interpret human behavior from an egocentric perspective, identify objects, and deduce the steps being performed.

| |
|---|
| Given a recipe, augmented reality allows us to sense objects in specific states, infer user actions, and provide critical guidance to perform a task. |

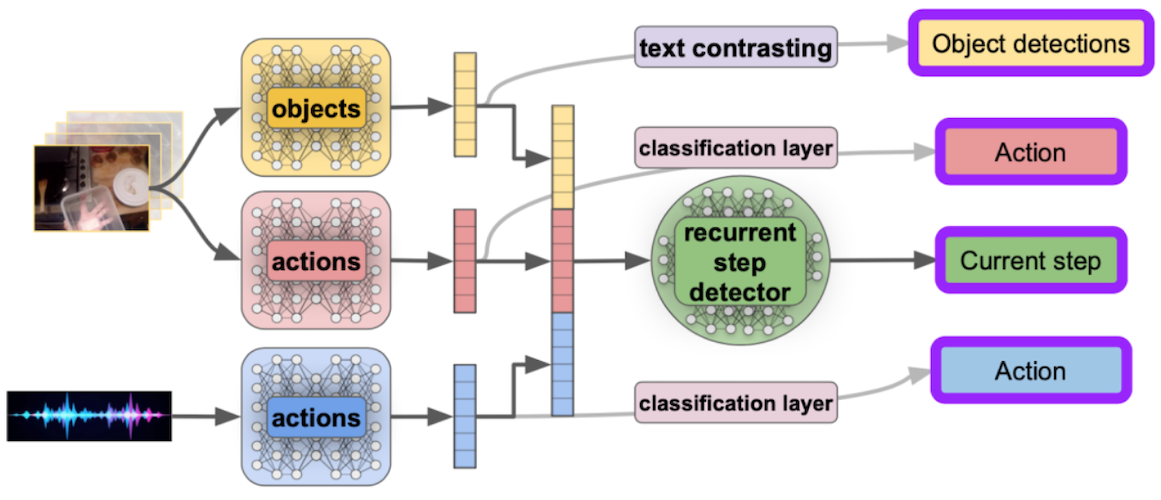

Through this project, I have collaborated with Raytheon/BBN, which uses my multimodal models integrating video, audio, and object detections to infer steps in distinct medical procedures. This system has been evaluated by MIT Lincoln Labs and can guide paramedics through medical tasks such as applying a tourniquet. Together with BBN, we have optimized the models' integration into an AR system that offers users real-time feedback on potential errors.

| |
|---|
| My multimodal deep learning model for step detection in medical procedures. It uses audio-visual representations of actions, objects, and sounds to infer user progress through a task. |
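
To make the idea of combining modalities concrete, here is a minimal Python sketch of one common approach, late fusion followed by forward-only step tracking. It is not the deployed model: the step names, modality weights, and per-frame scores are hypothetical values chosen for illustration, and the helper functions `fuse` and `track_steps` are assumptions introduced here, not part of any existing system.

```python
import math

def softmax(scores):
    """Convert raw per-step scores into probabilities."""
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def fuse(modality_scores, weights):
    """Late fusion: weighted average of each modality's step probabilities."""
    total_w = sum(weights[name] for name in modality_scores)
    n_steps = len(next(iter(modality_scores.values())))
    fused = [0.0] * n_steps
    for name, scores in modality_scores.items():
        for i, p in enumerate(softmax(scores)):
            fused[i] += weights[name] * p / total_w
    return fused

def track_steps(frames, weights):
    """Forward-only tracking: stay on the current step or advance by one."""
    current = 0
    path = []
    for modality_scores in frames:
        fused = fuse(modality_scores, weights)
        nxt = current + 1
        # Advance only when the next step is more likely than the current one.
        if nxt < len(fused) and fused[nxt] > fused[current]:
            current = nxt
        path.append(current)
    return path

# Hypothetical step names, weights, and scores (illustration only).
STEPS = ["expose wound", "place tourniquet", "tighten strap"]
WEIGHTS = {"video": 0.5, "audio": 0.2, "objects": 0.3}
FRAMES = [
    {"video": [2.0, 0.0, 0.0], "audio": [0.5, 0.0, 0.0], "objects": [1.0, 0.0, 0.0]},
    {"video": [0.0, 2.0, 0.0], "audio": [0.0, 0.0, 0.0], "objects": [0.0, 1.5, 0.0]},
    {"video": [0.0, 2.0, 0.0], "audio": [0.0, 0.5, 0.0], "objects": [0.0, 1.0, 0.0]},
    {"video": [0.0, 0.0, 2.5], "audio": [0.0, 0.0, 1.0], "objects": [0.0, 0.0, 1.0]},
]

path = track_steps(FRAMES, WEIGHTS)
print([STEPS[i] for i in path])
```

The forward-only constraint reflects the assumption that a procedure is followed in order. A real system would likely need to handle skipped or repeated steps, which is where error feedback becomes relevant.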

Recently, my work has also expanded to modeling human behavior within AR systems using biometric data, integrating functional near-infrared spectroscopy (fNIRS) data to explore cognitive states such as perception, attention, and memory during task performance.

© 2024 Iran R. Roman